The $1.50 Insurance Policy: Why I used S3 to back up my private cloud storage.

Published on Jan 13, 2026 • Read on Substack

Building an automated, client-side encrypted disaster vault on AWS.

In my previous post, I argued for building a private cloud to escape the subscription gravity of modern cloud providers. The premise was simple: complete ownership, zero rent, and a server that lives in my house.

Then the hardware failed.

Specifically, my Raspberry Pi OS was corrupted due to a power incident. While the NVMe data persisted, the panic of that “what if” moment forced a pivot. I realized my anti-cloud server needed a cloud backup.

The challenge was paradoxical: How do you back up a self-hosted server to the cloud without validating the very business models you’re trying to escape?

The Requirement: A Digital Doomsday Vault

I wasn’t looking for “sync.” I didn’t need a “Photos App” or collaborative editing. I needed a digital doomsday vault. A place to dump encrypted blobs of data that I would hopefully never touch again.

The engineering constraints were strict:

- Cold Storage: Retrieval time is irrelevant. If disaster strikes, a 12-hour recovery window is acceptable.

- Client-Side Encrypted: The provider acts as a blind bit bucket. They hold the bits; I hold the keys.

- Commodity Pricing: Paying SaaS premiums ($10/mo) for raw storage is a failure state.

The Landscape

There is a fundamental difference between Google Drive (SaaS) and AWS S3 (Infrastructure).

- Google Drive: Indexes your photos, scans for facial recognition, and trains models. You are the product.

- AWS S3: Stores an

encrypted-blob-12345. You are a tenant.

There are three main options for “mainstream” cold storage, and my thoughts are below.

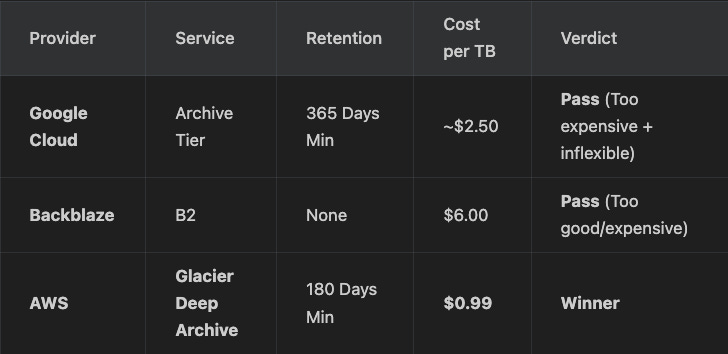

Backblaze B2 is the darling of the self-hosting community, and for good reason: it’s simple. But it serves “Hot” storage. Paying $6/mo for data I might touch once a year, if that, is inefficient resource allocation.

AWS S3 Glacier Deep Archive is the extreme end of the storage spectrum. It is essentially a tape library API. It is hostile to quick retrieval, requiring a 12-hour thawing process.

S3 also had some very interesting bucket lifecycle options besides deep archive. Particularly, “Glacier Flexible Retrieval” piqued my interest. I ultimately skipped it. Deep Archive's predictable pricing was better.

Google Cloud Archive was disqualified simply on price. At ~$2.50/TB, it is more than double the cost of AWS. When the goal is strictly commodity pricing, a 150% premium is a non-starter.

For a disaster recovery vault, AWS was the pick. That hostility is a feature, not a bug.

Engineering The Solution

Implementing this required solving three specific problems: Privacy, Request Overhead, and Safety.

1. Privacy (Client-Side Encryption)

The architecture uses rclone with crypt remotes. The flow is unidirectional:

- Pack: The Pi compresses the filesystem.

- Encrypt: Rclone encrypts the stream using a local private key.

- Push: The encrypted stream is sent to S3.

2. The “One Zip” Optimization

Here is where the “cheap” cloud gets expensive. AWS charges almost nothing for storage ($0.00099/GB), but they charge significantly for state changes.

Deep Archive PUT Requests cost $0.05 per 1,000 uploads.

My Nextcloud instance contains roughly 100,000 small files (photos, receipts, logs).

- Naive Approach: upload 100,000 files = $5.00 per backup run.

- Optimized Approach: tar -czf the entire directory into 1 file = $0.00005 per backup run. This was something I went back and forth with, as the cost is worth enough to follow through with, but this adds an extra layer for retrieval.

3. The Kill Switch (IAM Safety)

Self-hosting shouldn’t induce financial anxiety. I wanted to verify that a script loop couldn’t accidentally bankrupt me.

I utilized AWS Budgets for a kill switch.

- Budget Limit: $3.00 / month.

- Trigger: If forecasted spend > Limit.

- Action: An automated IAM Role attaches a Deny All policy to the backup user.

If the script goes rogue, the credentials burn themselves. Paranoia stems from this Reddit post

The Verdict

I haven’t abandoned self-hosting; I’ve fortified it.

My Raspberry Pi remains the sovereign home of my data. It runs cloudflared for secure

ingress and a hardware watchdog for stability. But now, for the price of a pack of gum, it has an

infinite-retention, off-site disaster recovery plan.

I still own the data. I just rent the shelf space.

The magic.

Thanks for reading. Back to the blog.